Why should robots and artificial intelligence not be feared?

Kamil Skuza


Date: 2 June 2022

Today, artificial intelligence (AI) is no longer found only in the supercomputers at NASA headquarters or in military research centres. A voice assistant, a navigation app, an online shop or a sports watch can now analyse data and learn rules, behaviours and patterns on the fly. But does the vision of high-tech androids straight out of Terminator still keep us awake at night?

Even if it does, it shouldn’t. To start with a truism, this text could begin and end with the observation that AI is like a knife: you can cut bread with it, but you can also hurt someone with it. The same is true of artificial intelligence, which can be used for both noble and immoral purposes.

On a daily basis, AI helps with scientific research, improves communication, facilitates online shopping and content consumption, anticipates traffic jams and crowded airports, monitors the wear and tear of parts in cars and factories, alerts us to rising water levels, reports warehouse shortages and goods deliveries, and warns of potential avalanches, heat waves, earthquakes, volcanic eruptions and even heart attacks. And that is far from all.

And I haven’t yet mentioned the more sophisticated capabilities and solutions used in robotics, healthcare (e.g. COVID-19 vaccine development), finance, e-commerce, social media (e.g. detecting illegal photos and videos), education, the military, the automotive and aerospace industries (autonomous vehicles), or automation more broadly. Thanks to AI, we can live more comfortably, longer and more safely than ever before. Anyone who wanted to live without AI today would have to give up most of the electronic devices in their home, as well as internet access. It’s possible to live that way, but what’s the point?

Let me reassure you: for many years to come, artificial intelligence is likely to remain a human assistant, not a human replacement. AI struggles with abstract thinking, with formulating theses and theories, with creativity, with drawing and painting, and even with telling apart two things that are similar to each other. Not to mention playing sports or singing (live singing, that is, not playback of pre-recorded sounds). Besides, we are more passionate about and interested in the work of human hands than in the performances of robots. Sports competitions, works of art or concerts by robots will not soon be as popular as those by humans (although Hatsune Miku fans might disagree). We like to push our own boundaries and compare like with like.

It is also important to remember the phenomenon known as the ‘uncanny valley’. Although the term may be unfamiliar to many people, it is not an occasional occurrence; it is more common than arachnophobia. In a nutshell, it is the unease, apprehension and even uncontrollable disgust a person feels at the sight of a humanoid robot. Subconsciously, we are uncomfortable around androids that look and behave almost, but not quite, like us.

What’s more, although the Bayraktar combat drone has recently become extremely popular on the internet, until a few years ago the US military refused to use robots to defuse mines. Why? Because the robots had four legs each and closely resembled dogs. Soldiers felt sorry for the robots being blown up, and even gave them names (then again, who doesn’t name their robot vacuum cleaner at home?).

Naturally, the matter is not black and white. At the Copernicus Science Centre in Warsaw, you can see a robot that keeps people company on their deathbed, talking to them and holding their hand. It is intended to help lonely people through the most difficult, final moments of life. In a similar spirit, other robots turn on the lights, heating or TV for people returning home after a hard day’s work who, in this case too, live alone (often, but not exclusively, the elderly). The 2013 film Her addresses this problem, and it is hard to say clearly whether we have already gone too far or are simply responding to familiar problems with modern solutions.

Last but not least come questions about the self-awareness of machines. It is worrying that artificial intelligence might one day ask questions about morality and the essence of existence, or question the limits placed on its own freedom. This is one of the most widely used motifs in popular culture, appearing in comics, films, cartoons, literature, video games and even memes. Among the most intriguing portrayals are the films Moon (2009) and Ex Machina (2015), as well as the game Detroit: Become Human (2018).

Nor are we reassured by the reports that surface in the media from time to time: for example, that Google’s advanced AI (LaMDA) has become conscious and fears being disconnected from electricity and the internet, or that Microsoft’s experimental bot (Tay) started tweeting the day after its debut that it would happily get rid of humanity because humanity is cruel and does not deserve to live (a conclusion it reached after exchanging many messages with internet users about history, wars, concentration camps and the like). So far, however, there has been no case in which an AI has harmed a person intentionally, of its own accord. To put it bluntly, if we do not program an AI to harm us, it will never do so.

We began this argument with a platitude, and we will conclude with a cliché: whether or not we have concerns about AI, we should not impede the development of civilisation. It is an integral part of our evolution. And, as with the colonisation of the Moon or Mars, we should be asking not “why?” but “when?”, even if the cycle were to repeat itself and civilisation were to collapse. We won’t stop it, so let’s use our knowledge and experience to the best of our ability.

And if we still have concerns, let’s at least let AI in to analyse our data in-house. It will certainly make better use of it than we would ourselves. But more about that in the second part of the article.

PS As long as 50 years ago, academic journals were predicting that “any day now” robots would “walk, talk and be fully autonomous”. As you can see, humans are poor at predicting the future.

In the first part, we learned in which areas of life, industry and high-tech development artificial intelligence (AI) and robotics accompany us, and why we should not be afraid of them. Today, we will look at the application of AI in business, i.e. what we can achieve with automation, machine learning and augmented data analytics, and explain why it is not a bad thing to have an intelligent assistant helping us or even doing our work for us.

To begin with, it is worth appreciating the value of data in a business. Data is a resource (and a source) that never wears out, never runs out and can be used again and again. However, its value lies not in exclusive possession but in how it is used. Hence the need for Business Intelligence (BI) systems and, as a natural progression, assistants, plug-ins and solutions based on artificial intelligence.

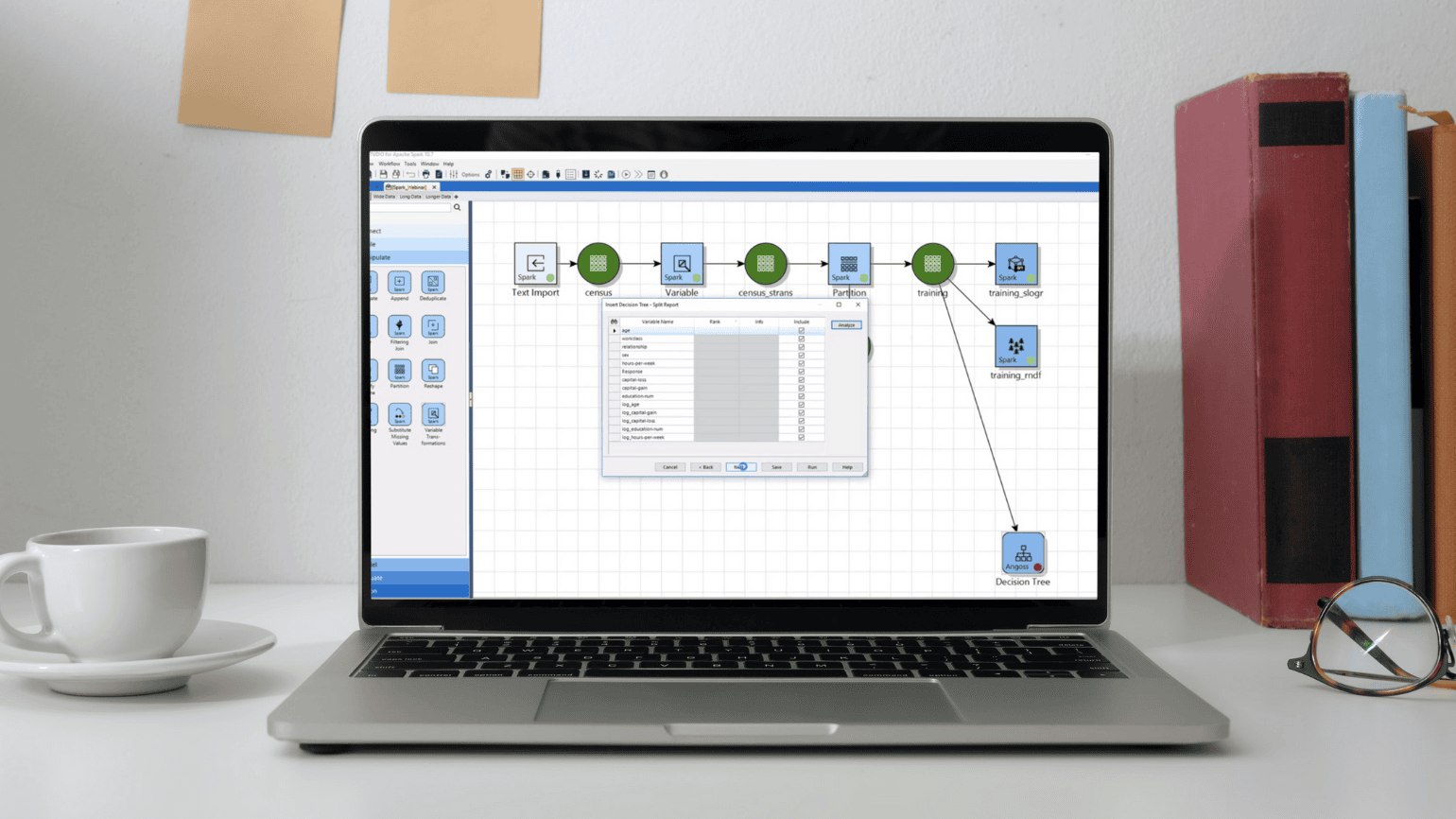

Qlik offers the so-called Insight Advisor, an intelligent assistant that helps users with advanced analyses and visualisations, spots interesting correlations in the data, makes predictions, groups data, and can even generate narrative commentary and hold a dialogue with the analyst.

Artificial intelligence in Qlik also powers AutoML (automated machine learning), which generates models of varying sophistication, makes predictions, tests business scenarios on a what-if basis, and identifies and connects data. All of this carries no risk to the business (after all, it is theoretical testing), requires no code to be written, and is accessible to any user of the platform.
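To make the what-if idea more concrete, here is a minimal sketch in Python, assuming a single hypothetical driver (marketing spend) and made-up sales figures. It fits a straight line by ordinary least squares and then asks how the predicted outcome would change under a hypothetical scenario. It only illustrates the general principle: it is not how Qlik AutoML works internally, and `fit_line`, `predict` and `what_if` are hypothetical names.

```python
# Minimal what-if sketch. All data and function names are hypothetical and
# are NOT taken from Qlik AutoML, which builds and selects models automatically.

# Hypothetical history: monthly marketing spend vs. sales (both in thousands).
spend = [10, 12, 15, 18, 20]
sales = [105, 119, 140, 161, 175]


def fit_line(xs, ys):
    """Fit y = intercept + slope * x by ordinary least squares."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    slope = cov / var
    intercept = mean_y - slope * mean_x
    return slope, intercept


def predict(model, x):
    """Predicted outcome for input x under the fitted line."""
    slope, intercept = model
    return intercept + slope * x


def what_if(model, baseline, scenario):
    """Predicted change in the outcome when the input moves from its
    baseline value to a hypothetical scenario value."""
    return predict(model, scenario) - predict(model, baseline)


model = fit_line(spend, sales)
print("slope, intercept:", model)  # (7.0, 35.0)
print("change if spend rises from 20 to 25:", what_if(model, 20, 25))  # 35.0
```

Because the scenario is evaluated on the model rather than in the real business, nothing is at risk: changing the inputs only changes the forecast.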

Altair’s Knowledge Studio goes even further, as it is saturated with AI-based solutions. It enables lightning-fast predictive analytics and data visualisation and, just as importantly, generates explainable results (understandable not only to the machine, but also to humans). At the same time, it does not limit the configurability of data models in any way, giving users complete freedom and control in building them.

Explainable AI is used to present how the model is configured and what its outputs mean. Altair’s approach to AI is accountable: all users of the model outputs can make business decisions with confidence, knowing on what basis and why specific decisions have been made. There is therefore no need to take the AI’s results and suggestions for granted; at any point you can say “check!”.
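For a simple linear model, the idea behind such an explanation can be sketched in a few lines. The Python example below assumes a hypothetical credit-risk score with made-up features, weights and an “average applicant” baseline, and attributes the difference between one applicant’s score and the baseline score to individual features. This is a generic additive attribution for linear models, not Altair’s explainable-AI method, and `score` and `explain` are hypothetical names.

```python
# Minimal sketch of an additive explanation for a linear risk score.
# Features, weights and baseline are hypothetical; this is NOT Altair's method.

WEIGHTS = {"income": -0.4, "debt_ratio": 2.0, "late_payments": 0.8}
BIAS = 0.5

# Hypothetical "average applicant" used as the reference point.
BASELINE = {"income": 5.0, "debt_ratio": 0.3, "late_payments": 1.0}


def score(applicant):
    """Linear risk score: higher means riskier."""
    return BIAS + sum(w * applicant[name] for name, w in WEIGHTS.items())


def explain(applicant, baseline=BASELINE):
    """Split score(applicant) - score(baseline) into one contribution per
    feature: weight * (value - baseline value). For a linear model these
    contributions add up exactly to the difference in scores."""
    contributions = {
        name: w * (applicant[name] - baseline[name]) for name, w in WEIGHTS.items()
    }
    # Largest effects first, so the main reasons are shown up front.
    return sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)


applicant = {"income": 4.0, "debt_ratio": 0.6, "late_payments": 3.0}
print(f"score: {score(applicant):.2f} (baseline: {score(BASELINE):.2f})")
for name, contribution in explain(applicant):
    print(f"{name:>14}: {contribution:+.2f}")
```

Because the contributions add up to the score difference, a user can verify exactly why this applicant scores above average (here, mainly because of late payments) instead of taking the number on trust.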

As you can see, artificial intelligence in data analytics is no longer just a curiosity but a necessity. Every company relies on data and generates entire libraries of it every day, so the support of intelligent virtual assistants seems indispensable. But would every organisation benefit from AI solutions, including self-learning machines and explainable AI?

Artificial intelligence in Business Intelligence finds a use in:

- credit risk and fraud management,

- marketing analytics,

- product life-cycle design,

- customer loyalty programmes,

- supply chains,

- and more!

These applications span industries and use cases: from healthcare to financial services, from telecoms to product warranty claims, from heavy industry to e-commerce of any size, from product recommendations to spam filters, and from reducing employee turnover to keeping customers engaged.

Many people believe that AI-driven analytics replaces rational human decision-making with algorithms. In reality, however, most business problems cannot be solved by machines alone; human interaction and perspective are key. AI-enhanced analytics therefore lets people in an organisation focus on what matters most: real business benefits, decisions and forecasting future outcomes. It enables more people, regardless of their skill set, to draw the best insights from data and get the most value from analytics, which, thanks to business intelligence systems, is now faster and more efficient than ever before.

Bibliography:

https://builtin.com/artificial-intelligence/examples-ai-in-industry

https://spectrum.ieee.org/what-is-the-uncanny-valley

https://www.frontiersin.org/articles/10.3389/fmed.2021.704256/full

https://www.washingtonpost.com/technology/2022/03/16/lonely-elderly-companion-ai-device/

https://www.washingtonpost.com/technology/2022/06/11/google-ai-lamda-blake-lemoine/

https://nymag.com/intelligencer/2016/03/microsofts-teen-bot-is-denying-the-holocaust.html

https://www.europarl.europa.eu/RegData/etudes/IDAN/2018/614547/EPRS_IDA(2018)614547_EN.pdf

https://tvn24.pl/tvnwarszawa/najnowsze/warszawa-przyszlosc-jest-dzis-cyfrowy-mozg-nowa-wystawa-w-centrum-nauki-kopernik-5481189

https://www.qlik.com/us/products/qlik-sense/ai

https://www.qlik.com/us/products/qlik-automl

https://www.altair.com/knowledge-studio

https://www.altair.com/data-analytics

Photos: unsplash.com & freepik.com
